Building trust in any new technology takes time—especially in legal practice, where accuracy and professional responsibility are central. As artificial intelligence becomes part of legal workflows, the question is no longer whether the technology has potential, but whether legal professionals can rely on it.

At the same time, many tools are no more than two to three years old. Can we believe that AI will deliver high value in the future, or are we trusting crystal-ball predictions? For now, that uncertainty can only be answered by statistics and by the impact the legal sector has already experienced. The fact that the technology is still relatively new and immature can be a barrier to trust, and therefore to adoption.

For AI to be used with confidence, lawyers need clarity on how it fits into their work, how it protects sensitive information, and how it supports—not replaces—their role. Trust is not created by technology alone; it is built through transparency, accountability, and thoughtful implementation.

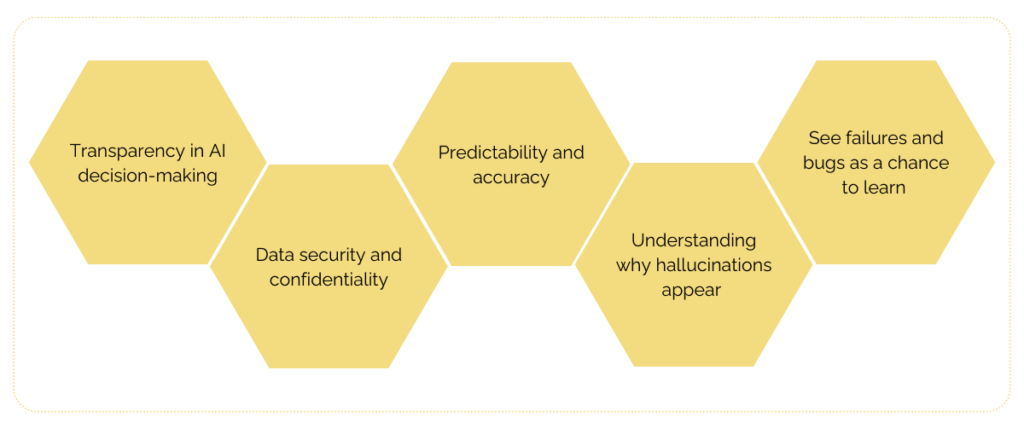

Key considerations for trustworthy AI adoption

Adopting AI in legal practice requires more than just selecting a technology provider. Trust is built through transparency, reliability, and alignment with professional standards. Several factors influence whether AI tools will be accepted and integrated into daily legal work:

Transparency in AI decision-making

AI should not function as a black box. Legal professionals need to understand how decisions are made, what data is used, and whether outputs can be explained or verified. Systems that provide reasoning, cite sources, and allow for human oversight foster greater confidence in their use.

Data security and confidentiality

Law firms handle sensitive client information and must ensure that AI tools align with strict confidentiality requirements. This includes clear policies on data storage, jurisdictional compliance, and a guarantee that legal documents are not used for AI model training without explicit consent. Without these safeguards, trust cannot be established.

Predictability and accuracy

AI adoption increases when legal professionals can rely on consistent outputs. Variability in AI-generated content—such as differences in contract analysis or risk assessment—can reduce confidence. Firms should evaluate AI tools through structured testing and validation before widespread implementation.
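One way to make "structured testing" concrete is to send the same prompt to a tool several times and measure how much the answers differ. The sketch below is only an illustration under stated assumptions: `run_tool` is a hypothetical placeholder for whatever interface a given AI tool exposes, `fake_tool` is a stand-in used for demonstration, and plain text similarity is a rough proxy for consistency. It says nothing about whether an answer is legally correct, so it complements human review rather than replacing it.

```python
from difflib import SequenceMatcher
from itertools import combinations
from statistics import mean
from typing import Callable, List


def consistency_score(outputs: List[str]) -> float:
    """Mean pairwise text similarity (0.0 to 1.0) across repeated outputs."""
    if len(outputs) < 2:
        raise ValueError("need at least two outputs to compare")
    return mean(
        SequenceMatcher(None, a, b).ratio()
        for a, b in combinations(outputs, 2)
    )


def evaluate_tool(run_tool: Callable[[str], str], prompt: str, runs: int = 5) -> float:
    """Send the same prompt `runs` times and score how similar the answers are."""
    outputs = [run_tool(prompt) for _ in range(runs)]
    return consistency_score(outputs)


# Hypothetical stand-in for a real AI tool; it always returns the same text.
def fake_tool(prompt: str) -> str:
    return "Clause 7 caps liability at the contract value."


score = evaluate_tool(fake_tool, "Summarise the liability clause.")
print(f"consistency: {score:.2f}")
```

A firm could run a check like this over a fixed set of representative prompts before rollout and flag any prompt whose score falls below a threshold it chooses for closer manual review.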

Understanding why hallucinations appear

It is important to be open and humble about AI hallucinations, but also to remember that hallucinations are a “side effect” of how the models are constructed and, at the same time, a “feature” of their ability to generate responses. It is in their nature to try to produce a convincing answer even when they do not have one for your instruction. To build trust in AI despite hallucinations, it can help to see AI as a new colleague: an intern who needs guidance on how to assist you with tasks and whom you need to get to know.

See failures and bugs as a chance to learn

When you treat AI as a new colleague, keep in mind that any new colleague makes mistakes (as we all do), just as AI sometimes hallucinates. It is therefore vital to create a learning culture and a mindset in which “failing”, whether by a person or by a technology, encourages experimentation rather than fear of making mistakes. That will lead to greater use of AI in everyday work and, in the long run, reduce lack of trust as a barrier to adoption.

The importance of thorough user adoption in building trust and optimizing the potential of AI

Legal AI adoption is not just about the technology—it is also about how professionals engage with it. Training, onboarding, and continuous support play a significant role in determining whether AI tools are seen as reliable or disruptive.

At Saga, Saga Amplify is designed to address these challenges by providing structured adoption programs tailored to law firms. By focusing on people and combining hands-on training with guidance on how to work effectively with the AI technology, your new colleague, firms can introduce AI in a way that builds trust rather than resistance.

Effective AI adoption programs should:

Focus on practical applications rather than theoretical use cases.

Provide clear guidelines on best practices and limitations of AI tools.

Clarify how to avoid hallucinations without being afraid of them occurring. Fear of hallucinations is a significant barrier to adoption, and it also reduces trust if not addressed properly.

Offer ongoing training and support so that legal professionals continue to have good experiences with the technology and adopt new functionality as this fast-evolving technology develops.

Looking ahead

AI adoption in the legal sector will continue to expand, but trust remains the foundation for success. Firms that prioritize transparency, security, and user engagement will see higher acceptance of AI tools and greater long-term value.

For legal professionals, the goal is not to replace expertise but to enhance it, allowing AI to support decision-making while maintaining the principles that define legal practice. Adopting AI is not a one-off implementation; it is a change in mindset.